Precision concurrency control with River Pro

Job queues usually focus on achieving maximum throughput and concurrency. Yet many use cases require precisely the opposite—controlling and limiting concurrent execution to protect external systems or to ensure fairness.

Since we launched River, concurrency control has been a frequent request, particularly among River Pro customers. Consider an external API with a strict limit on concurrent requests. On a single node, handling this is straightforward: just configure MaxWorkers. But in production, across multiple nodes, enforcing a global limit becomes complex and often leads to external locking mechanisms or throttling, which hurt queue throughput and complicate the system.

Today, we’re excited to announce Concurrency Limits in River Pro to help customers easily manage these scenarios.

Queue-level global and local limits

Concurrency limits are an opt-in feature for Pro customers. They’re enabled by adding a queue to the ProQueues list in the riverpro.Config:

    &riverpro.Config{
        ProQueues: map[string]riverpro.QueueConfig{
            "expensive_actions": {
                Concurrency: riverpro.ConcurrencyConfig{
                    GlobalLimit: 10,
                    LocalLimit:  1,
                },
                MaxWorkers: 1,
            },
        },
    }

This configuration ensures no more than 10 jobs run concurrently in this queue across your entire infrastructure (GlobalLimit), while each node is restricted to processing only one (LocalLimit).
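As a conceptual model of how the two limits interact, the sketch below simulates nodes picking up jobs under both a global and a local cap. It is a toy simulation under stated assumptions, not how River Pro coordinates clients: the `limits` type, `canStart`, and `simulate` are hypothetical names, and treating zero as "no limit" is a choice made for this sketch only.

```go
package main

import "fmt"

// limits mirrors the two fields from the configuration above. It is a
// hypothetical stand-in, not the riverpro type.
type limits struct {
	GlobalLimit int // max running jobs in the queue across all nodes
	LocalLimit  int // max running jobs in the queue on a single node
}

// canStart reports whether a node may start another job, given how many jobs
// are running everywhere (globalRunning) and on this node (localRunning).
// In this sketch a limit of zero means "no limit".
func (l limits) canStart(globalRunning, localRunning int) bool {
	if l.GlobalLimit > 0 && globalRunning >= l.GlobalLimit {
		return false
	}
	if l.LocalLimit > 0 && localRunning >= l.LocalLimit {
		return false
	}
	return true
}

// simulate offers pending jobs to the nodes round-robin until no node can
// start another one, and returns the number of running jobs per node.
func simulate(l limits, nodes, pending int) []int {
	running := make([]int, nodes)
	total := 0
	for progress := true; progress && pending > 0; {
		progress = false
		for n := 0; n < nodes && pending > 0; n++ {
			if l.canStart(total, running[n]) {
				running[n]++
				total++
				pending--
				progress = true
			}
		}
	}
	return running
}

func main() {
	l := limits{GlobalLimit: 10, LocalLimit: 1}
	fmt.Println(simulate(l, 4, 100))  // few nodes: the local limit binds
	fmt.Println(simulate(l, 25, 100)) // many nodes: the global limit binds
}
```

With 4 nodes, only 4 jobs run at once because each node is capped at one. With 25 nodes, 10 jobs run and the remaining nodes stay idle because the global cap has been reached.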

Granular partitioning

Sometimes, a global queue limit isn’t enough—for instance, to prevent individual customers or job types from monopolizing resources. River Pro supports granular partitioning by job arguments, such as customer_id, or by job kind, enabling precise control:

    &riverpro.Config{
        ProQueues: map[string]riverpro.QueueConfig{
            "one_per_customer": {
                Concurrency: riverpro.ConcurrencyConfig{
                    GlobalLimit: 1,
                    Partition: riverpro.PartitionConfig{
                        ByArgs: []string{"customer_id"},
                        ByKind: true,
                    },
                },
                MaxWorkers: 1,
            },
        },
    }

This example sets a global limit of one concurrent job per customer and per job type, preventing busy customers from overwhelming others.
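One way to picture partitioning is that each job maps to a key built from its kind and the selected argument fields, and the limit is counted per key rather than per queue. The sketch below illustrates that idea only. The key format, `partitionKey`, and `partitionLimiter` are assumptions made for this example and say nothing about how River Pro stores or computes partitions.

```go
package main

import (
	"encoding/json"
	"fmt"
	"sort"
	"strings"
)

// partitionKey derives a grouping key from a job's kind and the chosen
// fields of its JSON-encoded args. Hypothetical helper for illustration.
func partitionKey(kind string, encodedArgs []byte, byArgs []string, byKind bool) (string, error) {
	var args map[string]json.RawMessage
	if err := json.Unmarshal(encodedArgs, &args); err != nil {
		return "", err
	}

	// Sort a copy of the field names so the key does not depend on the
	// order in which they were configured.
	fields := append([]string(nil), byArgs...)
	sort.Strings(fields)

	var parts []string
	if byKind {
		parts = append(parts, "kind="+kind)
	}
	for _, f := range fields {
		parts = append(parts, f+"="+string(args[f]))
	}
	return strings.Join(parts, "|"), nil
}

// partitionLimiter counts running jobs per partition key. It is not safe for
// concurrent use; a real limiter would need locking and shared state.
type partitionLimiter struct {
	limit   int
	running map[string]int
}

// tryStart claims a slot in the partition and reports whether it succeeded.
func (p *partitionLimiter) tryStart(key string) bool {
	if p.running[key] >= p.limit {
		return false
	}
	p.running[key]++
	return true
}

// finish releases a slot previously claimed with tryStart.
func (p *partitionLimiter) finish(key string) {
	if p.running[key] > 0 {
		p.running[key]--
	}
}

func main() {
	lim := &partitionLimiter{limit: 1, running: map[string]int{}}
	jobs := []string{`{"customer_id":1}`, `{"customer_id":1}`, `{"customer_id":2}`}
	for _, raw := range jobs {
		key, err := partitionKey("send_email", []byte(raw), []string{"customer_id"}, true)
		if err != nil {
			fmt.Println("bad args:", err)
			continue
		}
		fmt.Printf("%s start=%v\n", key, lim.tryStart(key))
	}
}
```

In this run the second job for customer 1 is held back while the job for customer 2 starts, which is the fairness property the partitioned limit is meant to provide.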

Operational control in River UI

Concurrency limits are configured before a River client starts and can’t be modified directly while it’s running. Real-world scenarios, however, demand flexibility. What if a third-party API provider starts having issues, and you need to temporarily throttle outbound requests to them?

Adjusting configs or restarting services during an incident can be risky and time-consuming, as many of us have learned first-hand.

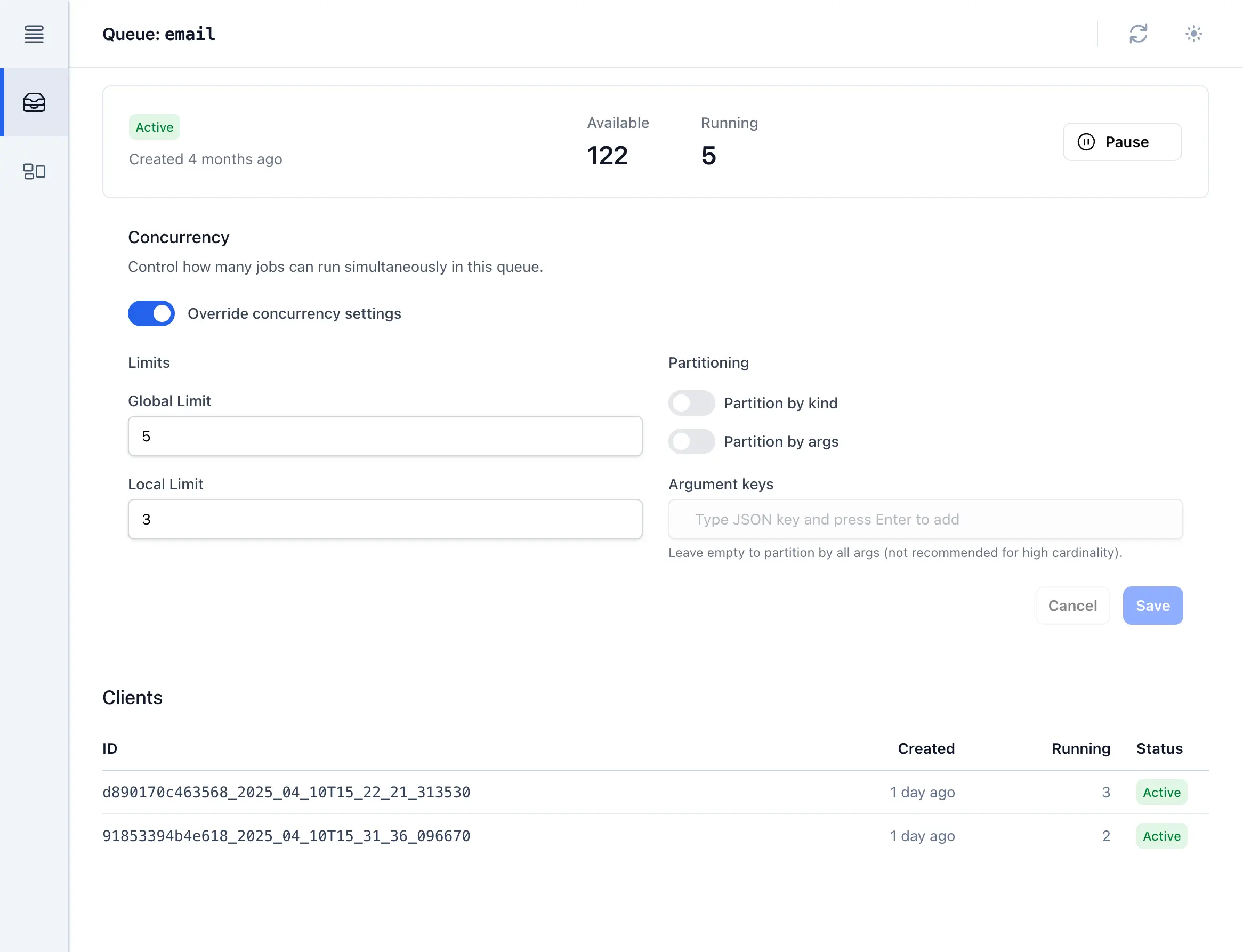

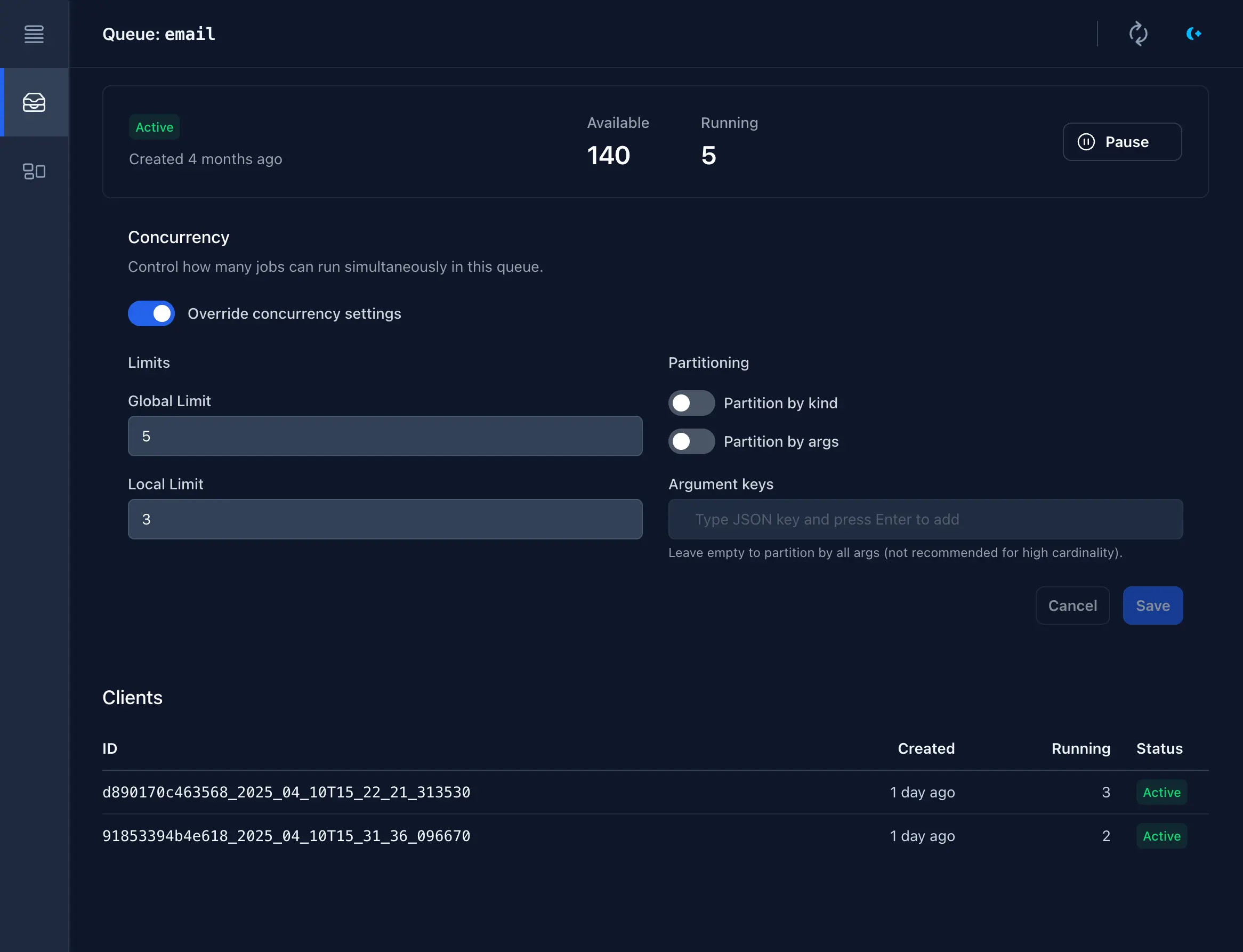

This is why we’ve made concurrency limits instantly overridable from River UI:

Most clients will pick up new limits almost instantly, while those in PollOnly mode will pick them up within a few seconds. As an added bonus, even regular queues not configured as ProQueues can be overridden in the UI, providing an important operational lever when you need it most.

For Pro customers, the new queue detail page also shows a real-time view of how many jobs each of their clients is running on that queue. This feature builds on the architecture that powers concurrency limits.

Try it out for yourself in our demo installation of River UI!

Composable with workflows and more

River Pro’s concurrency limits integrate seamlessly with existing features, including workflows. Tasks within workflows inherit the limits of whichever queue they’re added to, providing granular and flexible control over job processing. Individual tasks can be added to queues with strict concurrency limits, even as the remainder of the workflow runs without limits.

What’s next for River

Concurrency limits represent a major improvement to River Pro, laying the groundwork for other upcoming features including global rate limiting and improved detection of dead clients.

We’ve also shipped other new features in River and River Pro and will be telling you about them in the coming weeks. Stay tuned for more announcements, and sign up for occasional updates if you haven’t yet.